This week we are going to be looking into binary min-heaps, and how they can be used to find the k smallest elements within an given array of numbers and a given k value. As always, feel free to leave a comment and lets get right into this.

What is a Binary (min) Heap?

A binary heap is a data structure that takes the form of a binary tree. A binary heap has two different properties that defines what it is: a structure property and a heap-order property.

Structure wise, a binary heap takes the form of a complete binary tree. The heap-order property depends on the kind of binary heap we want to implement.

Since we are going to using a binary min-heap in our program, our heap-order property will be as follows: the root of the tree (or the subtree) is the smallest node in the tree (or subtree). Basically, the smallest value in the entire is at the root of the tree.

We will be able to take advantage of this heap-order property when finding the k smallest elements in a given array. In our implementation, we will be returning an array with the k smallest elements at the k greatest indices (for example we have an array [1, 2, 3, 4, 5, 6] and k = 2, then the result array would be [3, 4, 5, 6, 2, 1]).

The Implementation

In this lab, we will be implementing to kinds of template classes: a Heap class and a SpecialHeap class. Our Heap class will be our base binary min-heap class, while the SpecialHeap class inherits our Heap class. SpecialHeap will contain one unique method called get_k_smallest(), which will find the k smallest elements the array and format it as mentioned in the last paragraph of the "What is a Binary (min) Heap?" section.

Our Heap class will represent a complete binary tree in the form of a vector. This is possible by having our root node be at index 1 of the vector, our parent nodes being at k/2, our left child at 2k, and our right child at 2k+1. This can be seen below:

The reason why we are using a vector in our implementation of a binary min-heap instead of a binary tree is because it is simpler than implementing a binary tree, while also mimicking what a binary tree does. We can not use a binary search tree either because the heap-order property will violate the binary search tree order property.

Our vector in our Heap class will have a size of (2^N), where N is some value, so we have enough room to store a complete binary tree. Our vector will be initialized with the appropriate size in our default and parameterized constructor. Within our Heap class we will also have a "size" data member, which will keep track of the number of items that exist within our heap.

Our Heap class will have two private methods called _heapify() and _percolate_down(). _percolate_down() takes a vector index as input and ensures that the given element at the given index is in the correct place in the heap according to the heap-order property. _heapify() will be used in our parameterized constructor, and it ensures that an unordered heap becomes ordered. This is done by calling _percolate_down() starting from index N/2 and ending at 0.

We have two constructors: one default constructor and one parameterized constructor that takes in a vector as input. The default constructor initializes our internal vector to a default value of 128 (because of the 2^N requirement) and our size as 0. The parameterized constructor will set the size data member to the size of the input vector, set our internal vector to have a size of the input vector + 1, copy all the items from the input vector into our internal vector, and then call _heapify() to order our heap.

In both of the constructors, since the root starts at position 1, we fill position 0 with a sentinel value. The sentinel value is the lowest possible value for the data type that our template class is initialized for.

The rest of the methods are as follows:

- is_empty(): Tells user if the heap is empty

- insert(): Inserts an element into the heap

- delete_min(): Deletes the smallest element in the heap (basically the root)

- peek_min(): Returns the smallest element from the heap

- to_string(): Returns a string representation of the heap.

Now for our SpecialHeap. Since our SpecialHeap class inherits from our Heap class, we don't really have much to implement. All we have to do is implement our get_k_smallest() method, which is pretty easy to implement. Within our get_k_smallest() method, we do the following steps k times:

- Get the smallest element through peek_min().

- Delete the smallest element through delete_min().

- Copy the smallest element we have to the just vacated cell of the heap (index size + 1).

Once we are done with this, we set the size to 0 and return our internal heap vector. Now we are able to return an array (or vector in this case) where the k smallest elements are at the k greatest indices.

Lab Questions

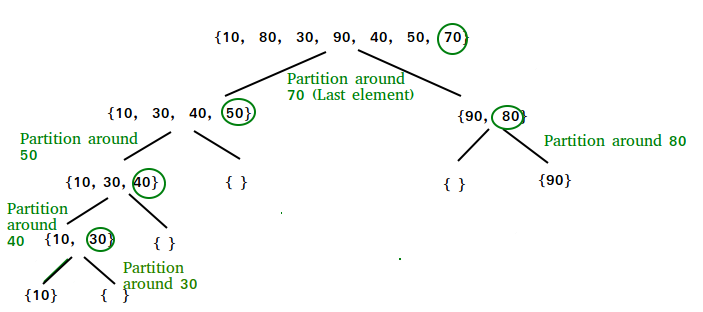

Q: Why you would use Quickselect (or Quicksort) at all instead of simply using a special heap (or Heapsort)?

A: I would say you would use Quickselect (or Quicksort) instead of using a special heap (or Heapsort) because it uses less memory while still achieving similar times alongside Heapsort. Although both our SpecialHeap and our Quicksort method from last week both do in-place operations, SpecialHeap uses more memory because it has to store the heap, data members, and class methods, while Quicksort can be implemented using two methods (as seen from last week's post).

Q: Why no use a balanced binary search tree for your backing store? Which of our original requirements (on page 1) would this implementation satisfy? What is the tradeoff, if any?

A: We do not use a balanced binary search tree because our heap-order property will conflict with the order property of a binary search tree. If it were possible to use this kind of implementation would probably satisfy the run-time complexity requirement of achieving O(k log N), but not the space requirement of O(1). The tradeoff would probably be same speed as the vector implementation, but uses more memory.

Conclusion

As we can see, a binary min-heap allows us to find the k smallest elements in an array, given a value of k. Although we only talked about binary min-heaps, we can modify our Heap class in such a way that allows for the create of binary max-heaps, which have a heap-order property that states the root of a tree (or subtree) is the biggest node in the tree (or subtree). I hope you enjoyed this week's post. Feel free to leave a comment and I will see you next week!